Bells AI

How does the AI in Bells AI work?

Bells uses Natural Language Processing (NLP) to understand the structure of what was written in the note. Based on that structure and on the configuration set by the organization, Bells shows recommendations, and the user decides whether to accept each one.

In addition to the recommendations, Bells uses NLP and Machine Learning (ML) to determine whether a note is too similar to a previous note. This is also controlled by configuration: the organization chooses whether to enable the check and sets the threshold for what counts as too similar.
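To illustrate how a configurable similarity check of this kind can behave, here is a minimal sketch in Python. It is not how Bells implements similarity detection, which this article does not describe. The sketch assumes a simple word-overlap (Jaccard) score, and the names `SimilarityConfig`, `enabled`, and `threshold` are hypothetical, chosen only to mirror the two settings mentioned above.

```python
import re
from dataclasses import dataclass


@dataclass
class SimilarityConfig:
    # Hypothetical settings for illustration; not actual Bells configuration keys.
    enabled: bool = True      # whether the similarity check runs at all
    threshold: float = 0.8    # scores at or above this value are flagged


def tokenize(text: str) -> set:
    """Lowercase the text and return its set of distinct word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard_similarity(note_a: str, note_b: str) -> float:
    """Return shared distinct words divided by all distinct words (0.0 to 1.0)."""
    tokens_a, tokens_b = tokenize(note_a), tokenize(note_b)
    if not tokens_a and not tokens_b:
        return 1.0  # two empty notes are treated as identical
    return len(tokens_a & tokens_b) / len(tokens_a | tokens_b)


def is_too_similar(new_note: str, previous_note: str, config: SimilarityConfig) -> bool:
    """Flag the new note only if the check is enabled and the score meets the threshold."""
    if not config.enabled:
        return False
    return jaccard_similarity(new_note, previous_note) >= config.threshold
```

In this sketch, lowering `threshold` flags more notes as too similar, and setting `enabled` to `False` turns the check off entirely, which matches the two kinds of configuration described above.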

Below is a list of the AI technologies used in Bells and the features that use each one:

Natural Language Processing (NLP): Used to understand the clinical documentation language and make recommendations

Machine Learning (ML): Used in note audits (similarity detection) and prediction models (no-show)

Generative Artificial Intelligence (GenAI): Used to generate patient summaries based on prior clinical documentation

Generative Artificial Intelligence (GenAI)/Retrieval Augmented Generation (RAG): Used to support natural language queries about a single patient or a whole population

NLP/ML/GenAI: Used for Ambient Listening

Optical Character Recognition (OCR): Used to convert images into text

NLP/ML: Used to translate native languages into English

Does Bells connect to external vendors or sources for AI?

Bells does not use external sources (like ChatGPT) for its AI. All of our AI capabilities are housed within Bells.

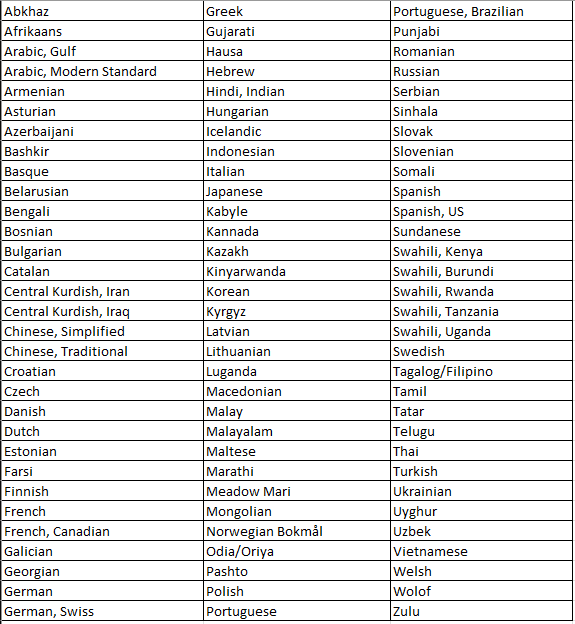

What languages does the Native Language Translation support in Bells?

As of December 2023, Bells supports the translation of the following languages to English: